Background: Sometimes we need to insert large number of rows to the database. (For example: transferring data from external source to our database). It is preferable that performance is fast and the expected time to finish is proportional to the number of rows to be inserted.

If you’re doing much more frequent imports, with heavy performance requirements using millions to hundreds of millions of rows, you’ll likely want to look at another tool more specifically targeted for batch/ETL processes or writing something directly in Java. For smaller jobs, you might be surprised how far you can get with groovy and grails.

System Requirement:

- Grails version : 2.4.0

- RAM : 8GB

- Processor : i3

Traditional approach to save object: Let’s assume that we’ve created a Grails domain class called ‘Person’ to represent the table we’re importing into, and insert 50000 records. You might be try something like this:

Domain class

String firstName

String lastName

String address

}

Note: saveRecords() method must be added in grails service.

(1..50000).each { counter ->

Person person = new Person()

person.firstName = “Jone”

person.lastName = “Roy”

person.address = “New york”

person.save(flush: true,failOnError: true)

}

}

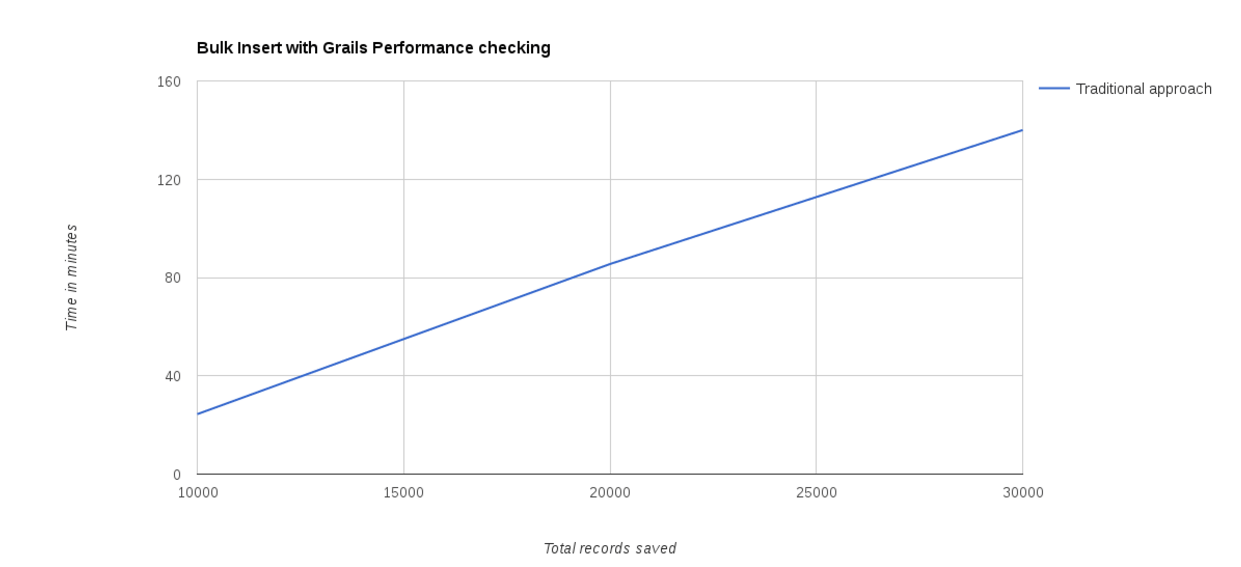

| Total Record saved | |||||

|---|---|---|---|---|---|

| 10000 | 20000 | 30000 | 40000 | 50000 | |

| Time (mins) | 24.4 | 85.49 | 140 | Out Of Memory Exception | |

Unfortunately, you would find this code to run very slowly and you might even run out of memory. It takes 140 minutes to import 30k records that can’t be right.This is because Grails is caching at two different levels, causing locking and a potential memory leak. So it’s better to try a different approach.

Solution 1 :

Using GORM cleanup: When creating a new domain object , always do flush() and then clear() the session regularly in order to control the size of the first-level cache.

(1..50000).each { counter ->

Person person = new Person()

person.firstName = “Jone”

person.lastName = “Roy”

person.address = “New York”

person.save(flush: true,failOnError: true)

if(counter.mod(100)==0) {

cleanUpGorm()

}

}

}

/*** GORM cleanup method***/

def cleanUpGorm() {

def session = sessionFactory.currentSession

session.flush()

session.clear()

propertyInstanceMap.get().clear()

}

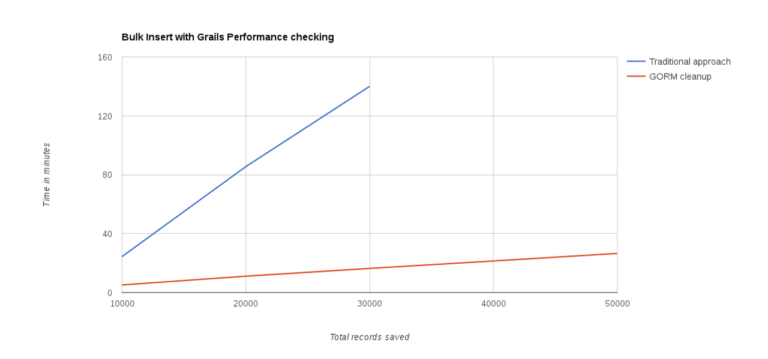

| Total Record saved | |||||

|---|---|---|---|---|---|

| 10000 | 20000 | 30000 | 40000 | 50000 | |

| Time (mins) | 5.15 | 11.1 | 16.37 | 21.43 | 26.55 |

Invoke Session.flush() + Session.clear() controls the end of the batch update, sends SQL statements to the RDBMS and remove all objects from the Hibernate session cache. It takes 26 minutes to save 50K records and it much better than simple GORM save method

Solution 2 :

Batch Processing:

Session session = sessionFactory.openSession();

Transaction tx = session.beginTransaction();

(1..50000).each { counter ->

Person person = new Person()

person.firstName = “John”

person.lastName = “Roy”

person.address = “New York”

session.save(person)

if(counter.mod(100)==0) {

//clear session and save records after every 100 records

session.flush();

session.clear();

}

}

tx.commit();

session.close();

}

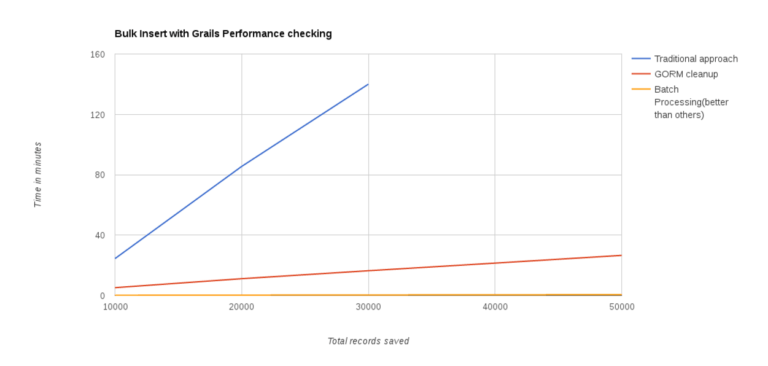

| Total Record saved | |||||

|---|---|---|---|---|---|

| 10000 | 20000 | 30000 | 40000 | 50000 | |

| Time (mins) | 0.14 | 0.24 | 0.34 | 0.43 | 0.54 |

Batch Processing allows you to group related SQL statements into a batch and submit them with one call to the database. When you send several SQL statements to the database at once, you reduce the amount of communication overhead, thereby improving performance.Batch processing takes 0.54 minute to save 50K records. It’s amazing!!

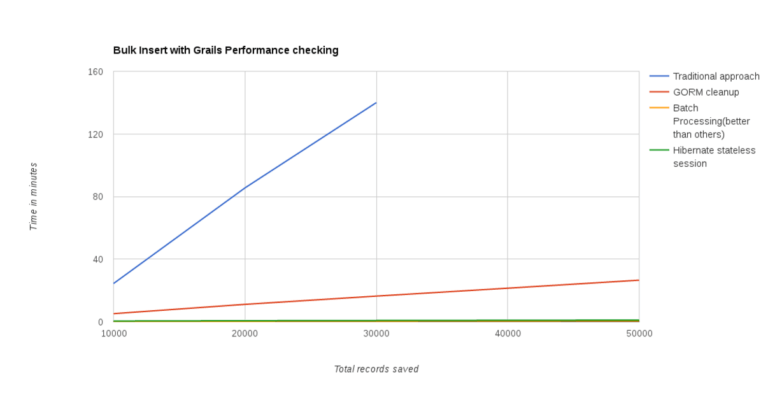

Solution 3 :

Hibernate StatelessSession: If you prefer to stick with GORM, you can. You’ll just need to compensate for Grails’ and Hibernate caching mechanisms, by pausing to clear the caches after every 20 records, instead of having to worry about clearing the Hibernate cache, you can open a stateless database session. It takes 1.07 minutes to save 50K records but not better than batch processing.

StatelessSession session = sessionFactory.openStatelessSession()

Transaction tx = session.beginTransaction();

(1..50000).each {counter ->

Person person = new Person()

person.firstName = “John”

person.lastName = “Roy”

person.address = “New York”

session.insert(person)

}

tx.commit();

session.close();

}

| Total Record saved | |||||

|---|---|---|---|---|---|

| 10000 | 20000 | 30000 | 40000 | 50000 | |

| Time (mins) | 0.44 | 0.65 | 0.79 | 0.93 | 1.07 |

Additional methods to improve performance:

Disable the second level cache of Hibernate

hibernate {

cache.use_second_level_cache = false

cache.use_query_cache = false

cache.provider_class = ‘net.sf.ehcache.hibernate.EhCacheProvider’

}

Avoid redundant checks, especially those that imply in additional access to the database

and // grails-app/conf/Config.groovy grails.gorm.failOnError=true

Conclusion: This post is just some of what can be done to improve batch performance with Grails and MySQL. Using these tips, I was able to speed up my own imports to a level that met the speed requirements for our problem space, but kept our code much more maintainable. Our domain object remain the canonical code representation of our database model.